Mohamed Fazil

"Let us make this world a better place with Robots"

My technical experience centers on assistive technology, robotics, concept prototyping, and deep learning research. Beyond the tech realm, I am passionate about travel and landscape photography.

My Bio

I have always been curious about unique tech applications and uncovering their potential.

In 2022, I started working as a Robotics Engineer at Hello Robot Inc., a company dedicated to developing the innovative mobile robot platform 'Stretch'. Stretch is a low-cost, lightweight mobile manipulator designed for safe human interaction and home use.

In 2021, I earned my Master's in Robotics from the University at Buffalo, US. I served as a Lab Tutor at the Digital Manufacturing Lab, where I provided advanced 3D-printing (3DP) prototyping services and built reverse engineering courses for junior students. At the same time, I worked on deep learning research projects in biomechanics involving motion capture, prosthesis control, and VR at the Assistive Wearable Robotics Lab (AWEAR).

In 2019, I graduated with a Bachelor's in Mechanical Engineering from Vellore Institute of Technology, India. My experience spans mechanical design, mechatronics, fabrication, computer vision, and tech workshops. I'm proud to have won the University-level Best Project Award for two consecutive years.

Hello Robot's Stretch 2 (2022)

(credits: Hello Robot/Julian Mehu)

My Significant Works

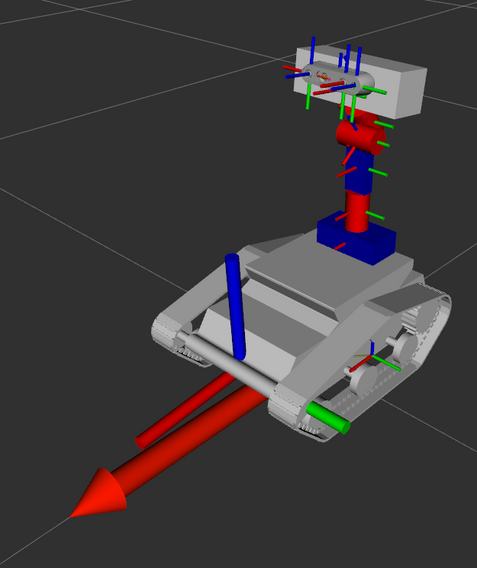

Autonomous Ground Vehicle with 3D Perception for Mapping / Multi-Object Pose Tracking

Tracked Differential Drive Robot + Jetson Nano + RealSense D435i + 3-DOF Manipulator

Built a ground robot for environment exploration: a 3-DOF manipulator with a RealSense D435i RGB-D camera, mounted on a tracked differential-drive mobile robot and fully controlled with ROS on a Jetson Nano board. The robot uses EKF localization, fusing wheel odometry with IMU (MPU6050) data for state estimation. Real-Time Appearance-Based Mapping (RTAB-Map) is used for building 3D space and 2D grid maps and for localization. I scripted a ROS multi-object tracker node that projects objects into 3D space and broadcasts them to the main TF tree, and built an organized repo of ROS packages for the robot's configuration, control, perception, and navigation.
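To give a feel for the odometry/IMU fusion idea, here is a minimal sketch. It is not the robot's actual code: it is a one-state linear Kalman filter for heading only, a much-simplified stand-in for a full EKF, and the class name `YawFilter` and all noise values are hypothetical.

```python
import math


def wrap_angle(angle):
    """Wrap an angle in radians to the interval [-pi, pi)."""
    return (angle + math.pi) % (2.0 * math.pi) - math.pi


class YawFilter:
    """One-state Kalman filter: gyro drives prediction, odometry corrects it."""

    def __init__(self, yaw=0.0, var=1.0, gyro_noise=0.01, odom_noise=0.05):
        self.yaw = yaw                # heading estimate (rad)
        self.var = var                # variance of the heading estimate
        self.gyro_noise = gyro_noise  # assumed process noise per second
        self.odom_noise = odom_noise  # assumed odometry measurement variance

    def predict(self, gyro_z, dt):
        """Integrate the IMU yaw rate; uncertainty grows with time."""
        self.yaw = wrap_angle(self.yaw + gyro_z * dt)
        self.var += self.gyro_noise * dt

    def update(self, odom_yaw):
        """Blend in the wheel-odometry heading; uncertainty shrinks."""
        innovation = wrap_angle(odom_yaw - self.yaw)
        gain = self.var / (self.var + self.odom_noise)
        self.yaw = wrap_angle(self.yaw + gain * innovation)
        self.var *= (1.0 - gain)
```

A typical loop would call `predict` at the IMU rate and `update` whenever a new odometry heading arrives. A real EKF tracks the full pose and velocity state with covariance matrices, but the predict/update structure is the same.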

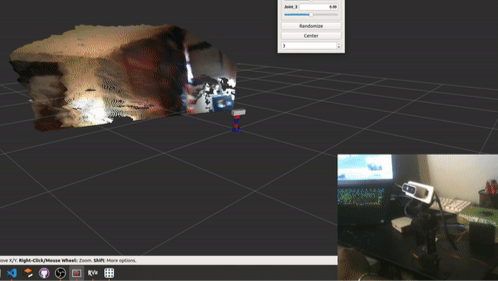

3-DOF Desktop Robot for Autonomous Object Tracking in 3D space

3-DOF Manipulator + Intel RealSense D435i + ROS (GitHub)

This project adds 3D perception applications to a desktop 3-DOF robot initially designed to mimic the human head's dexterity. It is a ROS package for the Intel RealSense D435i with a 3-DOF manipulator robot that can be used for indoor mapping and for localizing objects in the world frame, with the added advantage of the robot's dexterity. The 3-DOF manipulator is a self-built custom robot, and its URDF, including the depth sensor, is part of the package. The package covers rosserial communication with Arduino nodes to control the robot's joint states, and the PCL pipelines required for autonomous mapping, localization, and tracking of objects in real time.
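Localizing an object in the world frame from an RGB-D pixel can be sketched roughly as follows. This is an illustrative example rather than the package's code: it assumes an ideal pinhole camera without lens distortion, and the function names and intrinsics are hypothetical. In the actual ROS setup the camera-to-world transform would come from the TF tree.

```python
def deproject_pixel(u, v, depth_m, fx, fy, cx, cy):
    """Turn a pixel (u, v) with depth in metres into a 3D camera-frame point.

    fx, fy are focal lengths in pixels; cx, cy is the principal point.
    """
    x = (u - cx) * depth_m / fx
    y = (v - cy) * depth_m / fy
    return (x, y, depth_m)


def transform_point(rotation, translation, point):
    """Apply p_world = R * p_camera + t, with R given as a 3x3 nested list."""
    return tuple(
        sum(rotation[i][j] * point[j] for j in range(3)) + translation[i]
        for i in range(3)
    )


# Hypothetical intrinsics for a 640x480 image.
identity = [[1.0, 0.0, 0.0], [0.0, 1.0, 0.0], [0.0, 0.0, 1.0]]
p_cam = deproject_pixel(320, 240, 2.0, fx=600.0, fy=600.0, cx=320.0, cy=240.0)
p_world = transform_point(identity, (1.0, 0.0, 0.0), p_cam)
```

A pixel at the principal point lies on the optical axis, so its camera-frame point has zero x and y and only the measured depth along z.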

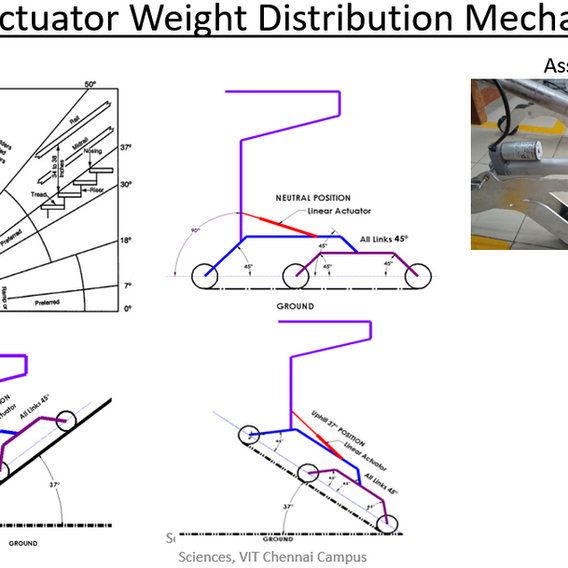

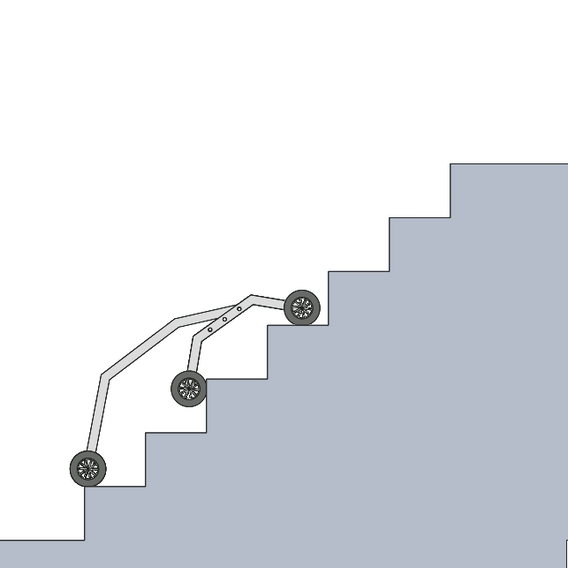

Smart Rollator with Rocker Bogie Suspension and IMU-Linear Actuators based weight distribution control

University Award Project, Mechanical Engineering Capstone Project (2019)

An advanced rollator (wheeled walker) that helps elderly and disabled people walk over obstacle-filled ground, go uphill and downhill, and climb steps. It uses a rocker-bogie suspension design with linear actuators that adjust the user's weight distribution over the rollator on different terrains. I developed a unique mechanical design and a mechatronic system consisting of linear actuators, an Arduino Mega board, BLDC motors with feedback control, and IoT connectivity, so that elderly users can always rely on it. I am now looking forward to developing it into a product.

Robotic Prosthesis Researcher - Deep Learning, Motion Capture, EMG, Virtual Reality

Researcher at Assistive Wearable Robotics Lab, UB (AWEAR)

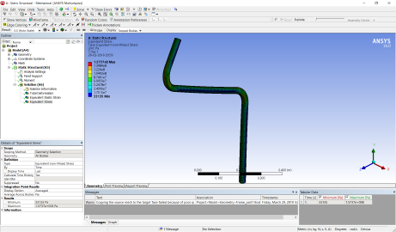

I completed my Master's research project, titled "Deep Learning approach to Robotic Prosthetic Wrist Control using EMG signals", under Professor Dr. Jiyeon Kang at the Assistive Wearable Robotics Lab (AWEAR). I designed experiments, collected forearm EMG signals, performed 3D reconstruction from a motion capture system, and applied convolutional neural networks to predict angular velocities and classify wrist movements for use in a real-time controller. Previously, I worked on a MyoWare-based prosthetic hook, which involved EMG signals, IMU data, 3D reconstruction and kinematics, Euler angle calculation for rigid body segments, a virtual reality simulation developed in the MuJoCo physics engine, and the Vicon motion capture system.
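The Euler angle calculation for a rigid body segment can be illustrated with a short sketch. The rotation convention used in the lab work is not stated here, so this example assumes the common ZYX (yaw, pitch, roll) order; the function name is hypothetical.

```python
import math


def euler_zyx_from_matrix(R):
    """Return (yaw, pitch, roll) in radians, assuming R = Rz(yaw) Ry(pitch) Rx(roll).

    R is a 3x3 rotation matrix given as nested lists, e.g. a segment
    orientation reconstructed from motion capture markers.
    """
    # Clamp to guard against values slightly outside [-1, 1] from rounding.
    sin_pitch = max(-1.0, min(1.0, -R[2][0]))
    pitch = math.asin(sin_pitch)
    yaw = math.atan2(R[1][0], R[0][0])
    roll = math.atan2(R[2][1], R[2][2])
    return yaw, pitch, roll
```

Near a pitch of plus or minus 90 degrees (gimbal lock), yaw and roll can no longer be separated, so a practical pipeline would need to handle that case explicitly.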

ROS Autonomous SLAM with Rapidly Exploring Random Tree (RRT)

Mobile Robotics and Lidar Perception (GitHub)

Developed a ROS package for autonomous environment exploration using SLAM in a Gazebo environment. The robot uses a laser range sensor to construct a map of its surroundings while dynamically exploring them with the Rapidly Exploring Random Tree (RRT) algorithm. It uses the ROS Navigation stack, with RViz visualizing the robot's perception of the environment. My Medium Story of this Project.
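For readers new to RRT, the core tree-growing loop can be sketched in a few lines. This is a generic 2D illustration, not the code from the ROS package: the function names, bounds, and step size are hypothetical, and `is_free` stands in for an occupancy-grid lookup.

```python
import math
import random


def steer(from_pt, to_pt, step):
    """Move from from_pt towards to_pt by at most `step`."""
    dx, dy = to_pt[0] - from_pt[0], to_pt[1] - from_pt[1]
    dist = math.hypot(dx, dy)
    if dist <= step:
        return to_pt
    return (from_pt[0] + step * dx / dist, from_pt[1] + step * dy / dist)


def grow_rrt(start, is_free, bounds, step=0.5, iterations=200, rng=None):
    """Grow a tree from `start`; returns a dict mapping node -> parent."""
    rng = rng or random.Random(0)
    parents = {start: None}
    (xmin, xmax), (ymin, ymax) = bounds
    for _ in range(iterations):
        # 1. Sample a random point in the search area.
        sample = (rng.uniform(xmin, xmax), rng.uniform(ymin, ymax))
        # 2. Find the existing tree node closest to the sample.
        nearest = min(parents, key=lambda node: math.dist(node, sample))
        # 3. Take one bounded step from that node towards the sample.
        new_node = steer(nearest, sample, step)
        # 4. Keep the node if it is free. For brevity only the endpoint is
        #    checked here, not the whole edge.
        if new_node not in parents and is_free(new_node):
            parents[new_node] = nearest
    return parents
```

Because samples are drawn uniformly, the tree is pulled towards large unexplored regions, which is what makes RRT attractive for exploration.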

HapTap - A haptics-based monitoring device for the elderly

Product design and Wearable device

HapTap is a haptics-based assistive device that combines the sensors required for health monitoring with an IMU to track the user and help them communicate through haptics. It is an IoT device with its own standalone server and a multi-device interface, and it can be used in any medical environment where users need to be tracked without their active involvement. The haptics concept was originally developed to help people with cerebral palsy. I am currently looking forward to kickstarting this product and am looking for investors.

Stereo Vision-based robot for Remote Monitoring with VR (Publication)

Published in Scopus indexed IJEAT Journal

With the help of stereo vision and machine learning, a robot can mimic the human visual system and human-like behavior towards the environment. In this paper, we present a stereo vision-based 3-DOF robot for monitoring places remotely using a cloud server and internet devices. The 3-DOF robot reproduces human-like head movements (yaw, pitch, and roll) and produces 3D stereoscopic video that it streams in real time. The video stream is sent to the user through any generic internet device with VR box support, such as a smartphone, giving the user a first-person, real-time 3D experience, while the user's head motion is transferred to the robot, also in real time.

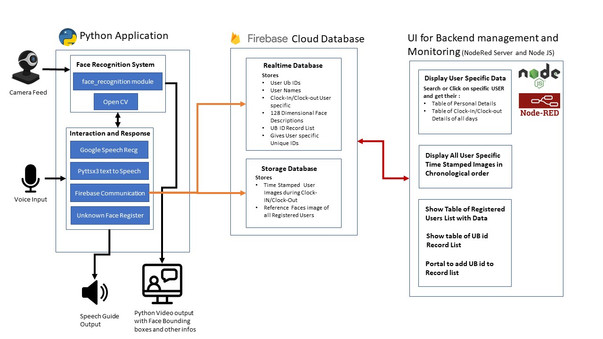

Touch-less Clock-Time System for University at Buffalo

Artificial Intelligence Institute, University at Buffalo

Designed and deployed a web-based touchless clock-in application that uses deep-learning face recognition, providing a completely touchless user/employee attendance system for the University at Buffalo. The web application was built in Angular and deployed through the Google Cloud Console, with a Python Flask backend that also runs on a GCP cloud server. It uses the Dlib deep learning library in Python and manages an unstructured database in the cloud through MongoDB. The prototype system includes an interactive Python bot that users interact with through voice input and guided voice output. The system stores each user's information along with a 128-dimensional face descriptor computed for each face. A back-end monitoring web interface was also created using Node-RED.
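Matching a new face against stored 128-dimensional descriptors usually comes down to a nearest-neighbour search. The sketch below is illustrative and not the deployed code: the function name and the in-memory `known` dictionary are hypothetical stand-ins for the MongoDB lookup, and the 0.6 Euclidean distance threshold is the value suggested in dlib's face recognition examples.

```python
import math


def match_face(descriptor, known, threshold=0.6):
    """Return the name of the closest stored descriptor, or None.

    descriptor: sequence of 128 floats for the face seen at clock-in.
    known: dict mapping user name -> stored 128-float descriptor.
    A match is accepted only if the Euclidean distance is below threshold.
    """
    best_name, best_dist = None, float("inf")
    for name, stored in known.items():
        dist = math.dist(descriptor, stored)
        if dist < best_dist:
            best_name, best_dist = name, dist
    return best_name if best_dist < threshold else None
```

Returning `None` for distant faces lets the system prompt an unrecognized user to register instead of clocking in the wrong person.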

Awards and Certification

Twice Best Project University Award Winner

2018-2019

Vellore Institute of Technology, May 2019

Received the "Best Project Award" in two consecutive semesters for my projects "The Smart Rollator for Elderly" and "The HapTap".

IoT Challenge Winner - Pragyan 2017

National Institute of Technology, Trichy, March 2017

My teammates and I won the National Level IoT Challenge conducted by NIT Trichy in 2017, where we developed an IoT-based protective suit for laborers that monitors their health conditions while they work in hazardous environments.

International Accessibility Summit 2017 - Delegate

Indian Institute of Technology, Madras, January 2017

I was selected to attend the International Accessibility Summit 2017, organized by the Indian Institute of Technology, Madras, which was also attended by world-renowned scientists and policymakers in the field of assistive technology. I presented my Modul-i product concept to the panelists.